#Database Schema


Text

Best Practices for Managing Django Migrations

Introduction: Django migrations are a powerful tool for managing database schema changes in your Django projects. They allow you to evolve your database schema over time while preserving data integrity and minimizing disruptions. However, as your project grows, managing migrations can become complex and challenging. Adopting best practices for handling Django migrations ensures that your…

0 notes

Text

absolutely unintelligible meme I made during bootcamp lecture this morning

#coding#data engineering#transformers#starscream#star schema#data normalisation#so the lecture was on something called 'star schema' which is about denormalising some of your data#(normalising data is a data thing separate meaning from the general/social(?) use of the word#it has to do with how you're splitting up your database into different tables)#and our lecturers always try and come up with a joke/pun related to the day's subject for their zoom link message in slack#and our lecturer today was tryna come up with a transformer pun because there's a transformer called starscream (-> bc star schemas)#(cause apparently he's a transformers nerd)#but gave up in his message so I googled the character and found these to be the first two results on google images and I was like#this is a meme template if I've ever seen one and proceeded to make this meme after lecture#I'm a big fan of denormalisation both in the data sense and in the staying weird sense

24 notes


Text

If I could make any change to Ao3, I would:

Distinguish between "major" and "minor" tags for characters/relationships, so that it's clear when a particular relationship is the whole point of a fic and when a particular relationship is merely incidental to/present in the fic. This would make filtering to find the desired type of fic much easier

Create a separate metadata field for canon-ness, with standardized options for "Canon Compliant," "Canon Divergent," and common Alternate Universes (e.g. "Alternate Universe - Omegaverse," "Alternate Universe - Coffee Shop," "Alternate Universe - Vampires"). Many authors do include this information in their tags, but it's often buried, and because there's no controlled vocabulary, trying to limit to or exclude based on these factors is hit or miss

#ao3#archive of our own#I'm a librarian and I like elaborate metadata#Ao3 genuinely has one of the best metadata/filtering schemas of any major database#but there are Improvements That I Desire

5 notes


Text

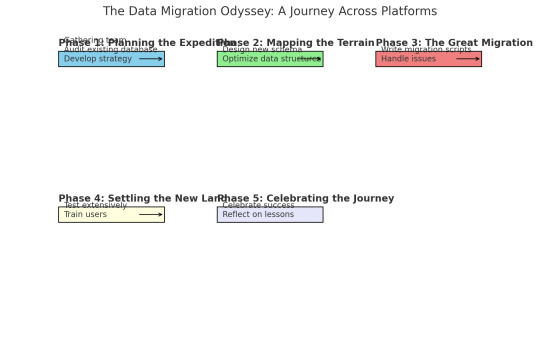

The Data Migration Odyssey: A Journey Across Platforms

As a database engineer, I thought I'd seen it all—until our company decided to migrate our entire database system to a new platform. What followed was an epic adventure filled with unexpected challenges, learning experiences, and a dash of heroism.

It all started on a typical Monday morning when my boss, the same stern woman with a flair for the dramatic, called me into her office. "Rookie," she began (despite my years of experience, the nickname had stuck), "we're moving to a new database platform. I need you to lead the migration."

I blinked. Migrating a database wasn't just about copying data from one place to another; it was like moving an entire city across the ocean. But I was ready for the challenge.

Phase 1: Planning the Expedition

First, I gathered my team and we started planning. We needed to understand the differences between the old and new systems, identify potential pitfalls, and develop a detailed migration strategy. It was like preparing for an expedition into uncharted territory.

We started by conducting a thorough audit of our existing database. This involved cataloging all tables, relationships, stored procedures, and triggers. We also reviewed performance metrics to identify any existing bottlenecks that could be addressed during the migration.

Phase 2: Mapping the Terrain

Next, we designed the new database schema using dynobird's online schema builder. This was more than a simple translation; we took the opportunity to optimize our data structures and improve performance. It was like drafting a new map for our city, making sure every street and building was perfectly placed.

For example, our old database had a massive "orders" table that was a frequent source of slow queries. In the new schema, we split this table into more manageable segments, each optimized for specific types of queries.

Phase 3: The Great Migration

With our map in hand, it was time to start the migration. We wrote scripts to transfer data in batches, ensuring that we could monitor progress and handle any issues that arose. This step felt like loading up our ships and setting sail.
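The batching described here can be sketched roughly as follows. This is a minimal illustration, not the team's actual scripts: it uses Python's built-in `sqlite3` module, with in-memory databases standing in for the old and new platforms, and the `orders` columns, `migrate_in_batches`, and `make_db` are all hypothetical names.

```python
import sqlite3


def make_db(rows=()):
    """Create an in-memory database with a hypothetical orders table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, total REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()
    return conn


def migrate_in_batches(src, dst, batch_size=2):
    """Copy orders from src to dst in fixed-size batches, keyed on id.

    Returns the number of batches written, so progress can be monitored.
    """
    last_id = 0
    batches = 0
    while True:
        # Keyset pagination: fetch the next batch after the last copied id.
        rows = src.execute(
            "SELECT id, customer, total FROM orders "
            "WHERE id > ? ORDER BY id LIMIT ?",
            (last_id, batch_size),
        ).fetchall()
        if not rows:
            break
        # Each batch is committed as one transaction (rolled back on error).
        with dst:
            dst.executemany(
                "INSERT INTO orders (id, customer, total) VALUES (?, ?, ?)", rows
            )
        last_id = rows[-1][0]
        batches += 1
    return batches


source = make_db(
    [(1, "ada", 10.0), (2, "bob", 5.5), (3, "cy", 7.25), (4, "di", 1.0), (5, "eve", 3.0)]
)
target = make_db()
batch_count = migrate_in_batches(source, target, batch_size=2)
copied = target.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
print(batch_count, copied)
```

Committing per batch means a failure partway through loses at most one batch, and the last copied `id` gives a natural point to resume from.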

Of course, no epic journey is without its storms. We encountered data inconsistencies, unexpected compatibility issues, and performance hiccups. One particularly memorable moment was when we discovered a legacy system that had been quietly duplicating records for years. Fixing that felt like battling a sea monster, but we prevailed.

Phase 4: Settling the New Land

Once the data was successfully transferred, we focused on testing. We ran extensive queries, stress tests, and performance benchmarks to ensure everything was running smoothly. This was our version of exploring the new land and making sure it was fit for habitation.

We also trained our users on the new system, helping them adapt to the changes and take full advantage of the new features. Seeing their excitement and relief was like watching settlers build their new homes.

Phase 5: Celebrating the Journey

After weeks of hard work, the migration was complete. The new database was faster, more reliable, and easier to maintain. My boss, who had been closely following our progress, finally cracked a smile. "Excellent job, rookie," she said. "You've done it again."

To celebrate, she took the team out for a well-deserved dinner. As we clinked our glasses, I felt a deep sense of accomplishment. We had navigated a complex migration, overcome countless challenges, and emerged victorious.

Lessons Learned

Looking back, I realized that successful data migration requires careful planning, a deep understanding of both the old and new systems, and a willingness to tackle unexpected challenges head-on. It's a journey that tests your skills and resilience, but the rewards are well worth it.

So, if you ever find yourself leading a database migration, remember: plan meticulously, adapt to the challenges, and trust in your team's expertise. And don't forget to celebrate your successes along the way. You've earned it!

6 notes


Text

Finally registered for classes this morning!

#didn’t get what I most wanted which was a database management systems course#I might try for it during add drop but I also might just accept I’ll have to take it next fall and won’t get to take data mining/analytics#we will see#but I did get my core course I most wanted which was about information organization meaning metadata schemas and such things#which I’m stupidly hyped for lmfao

4 notes


Text

Data Unbound: Embracing NoSQL & NewSQL for the Real-Time Era.

Sanjay Kumar Mohindroo. skm.stayingalive.in Explore how NoSQL and NewSQL databases revolutionize data management by handling unstructured data, supporting distributed architectures, and enabling real-time analytics. In today’s digital-first landscape, businesses and institutions are under mounting pressure to process massive volumes of data with greater speed,…

#ACID compliance#CIO decision-making#cloud data platforms#cloud-native data systems#column-family databases#data strategy#data-driven applications#database modernization#digital transformation#distributed database architecture#document stores#enterprise database platforms#graph databases#horizontal scaling#hybrid data stack#in-memory processing#IT modernization#key-value databases#News#NewSQL databases#next-gen data architecture#NoSQL databases#performance-driven applications#real-time data analytics#real-time data infrastructure#Sanjay Kumar Mohindroo#scalable database solutions#scalable systems for growth#schema-less databases#Tech Leadership

0 notes

Text

sometimes programming feels like beating the computer with a stick. do! what! i! want!! fuck!!!

#tütensuppe#in this case its the database#database guy migrated the data to a new schema without the gamebreaking issue#now none of my queries work anymore!!#so i was moving minor parts around trying to get it to work and then had to admit i cant figure this one out myself#every query gives 2360 results instead of one and i dont know why. whatever

1 note


Text

Database Schema Migrations Using ColdFusion and Liquibase

#Database Schema Migrations Using ColdFusion and Liquibase#Database Schema Migrations Using ColdFusion#Database Schema Migrations Using Liquibase

0 notes

Text

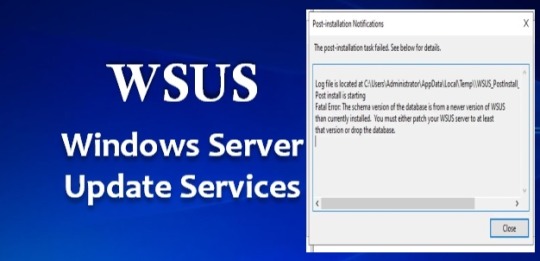

The schema version of the database is from a newer version of WSUS

The WSUS installation from Server Manager fails with a fatal error stating, “The schema version of the database is from a newer version of WSUS than currently installed.” This error requires either patching the WSUS server to at least that version or dropping the database. Windows Update indicates that the system is up to date. Please see how to delete ADFS Windows Internal Database without…


#“WSUS Post-deployment Configuration Failed#Microsoft Windows#Remove Roles and Remove features#The schema version of the database#When prompted with the "Remove Roles and features Wizard"#Windows Internal Database (WID)#Windows Server 2012#Windows Server 2016#Windows Server 2019#Windows Server 2022#Windows Server 2025#WSUS#WSUS Database#WSUS Updates (Windows Server Update Services

0 notes

Text

Searching for a Specific Table Column Across All Databases in SQL Server

To find all tables that contain a column with a specified name in a SQL Server database, you can use the INFORMATION_SCHEMA.COLUMNS view. This view contains information about each column in the database, including the table name and the column name. Here’s a SQL query that searches for all tables containing a column named YourColumnName: SELECT TABLE_SCHEMA, TABLE_NAME FROM…
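The same idea can be tried without a SQL Server instance. The sketch below is a stand-in under stated assumptions, not the post's query: SQLite has no `INFORMATION_SCHEMA`, so it reads `sqlite_master` and `PRAGMA table_info` instead, and the function name `find_tables_with_column` and the demo tables are hypothetical.

```python
import sqlite3


def find_tables_with_column(conn, column_name):
    """Return the names of tables that have a column called column_name.

    SQLite analogue of a column search: list the tables from sqlite_master,
    then inspect each table's columns with PRAGMA table_info.
    """
    tables = [
        row[0]
        for row in conn.execute(
            "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
        )
    ]
    matches = []
    for table in tables:
        # Quote the identifier so unusual table names are handled safely.
        quoted = '"' + table.replace('"', '""') + '"'
        # Each table_info row describes one column; index 1 is its name.
        columns = [row[1] for row in conn.execute(f"PRAGMA table_info({quoted})")]
        if column_name in columns:
            matches.append(table)
    return matches


demo = sqlite3.connect(":memory:")
demo.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT)")
demo.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER)")
demo.execute("CREATE TABLE staff (id INTEGER PRIMARY KEY, email TEXT)")

tables_with_email = find_tables_with_column(demo, "email")
print(tables_with_email)
```

The comparison here is an exact, case-sensitive match on the column name; whether a SQL Server search is case-sensitive depends on the database collation.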


#cross-database query#database schema exploration#Dynamic SQL execution#find column SQL#Results#SQL Server column search

0 notes

Text

Softfactory, an online database schema tool: extremely easy to use, really great!!!

“If you desire a high-performance database that operates with rapid search, then database design is indispensable. Spending time on database design will help you avoid issues of inefficiency and high redundancy. ”

Softfactory is an online database design tool with a clear, attractive interface and simple functions that need no excessive configuration. It is easy to use and makes building and optimizing a database design more relaxed, with attention to detail from tables to diagrams.

Visual Data Tools

Softfactory integrates three practical tools:

1. Table design: Users can generate tables with AI or create them manually. Once the table structure is designed, you can choose a code language, generate CRUD code, and push it to your local development tools.
2. Table structure generation: You can obtain the table's DDL and view or copy it in the preview window and designer. You can also generate CRUD code from the table structure in a language you specify and push it to your local machine through the development tool plugin.
3. Diagram: Tables dragged into the diagram are automatically sorted and laid out by foreign keys. Tables without foreign key relations can also be connected and deleted manually.

Version Control

It is possible to copy the entire table to a branch, supporting branch management and creating snapshots of tables for iterative development and backup.

Team Collaboration

Supports sharing and team collaboration: users can invite specific people through a shared link or by contact methods such as email, and team members can share access permissions.

Simplify Team Development Process

Softfactory allows teams to easily collaborate to create and maintain diagrams and tables online. Table structure changes are displayed in real time, which eliminates the need to manually synchronize diagram files between developers and offline tools and puts an end to the chaos of multiple people maintaining table structures.

Whether you are an individual developer or part of a team, a beginner or a professional, Softfactory can provide you with a suitable solution. If you are looking for a powerful, easy-to-use, efficient, and reliable online table structure design tool, Softfactory is a second-to-none choice.

0 notes

Text

Installing HR sample schema script in Oracle Linux platform

Script to set up the HR sample schema on the Oracle Linux platform

On the Linux machine, go to the directory under the Oracle home that holds the Human Resources script:

[oracle@Linux1 /]$ cd $ORACLE_HOME/demo/schema/human_resources
[oracle@Linux1 human_resources]$

Connect to the database with the sqlplus command:

sqlplus / as sysdba
Connected to: Oracle Database 19c Enterprise Edition Release…


0 notes

Text

Retro-Engineering a Database Schema: GPT vs. Bard vs. LLama2 (Episode 2)

Exciting news! In my latest blog post, I dive into the world of database retro-engineering and compare the performance of three AI models: GPT, Bard, and the new player on the block, LLama-2. 🚀

This article discusses how LLama-2 analyzes a dataset and suggests a database schema with separate tables for different categories. It successfully identifies categorical and confidential columns, providing valuable insights for data analysis. 💡

Curious about the results? Click the link below to read the full blog post and learn about LLama-2's performance and areas for improvement. 📖 [Read more here](https://ift.tt/SosADt0)

Don't miss out on the latest trends in database retro-engineering! Stay informed and unlock valuable insights for your data-driven projects. #DataScience #AI #DatabaseRetroEngineering

#itinai.com#AI#News#Retro-Engineering a Database Schema: GPT vs. Bard vs. LLama2 (Episode 2)#AI News#AI tools#Innovation#itinai#LLM#Pierre-Louis Bescond#Productivity#Towards Data Science - Medium Retro-Engineering a Database Schema: GPT vs. Bard vs. LLama2 (Episode 2)

0 notes

Text

Architecture design is a must when developing a SaaS application, both to ensure its scalability and to optimise infrastructure costs.

0 notes

Note

genuinely very curious- how full is your submission backlog? i'm sure y'all get quite a few submissions pretty regularly, i'm just curious how many y'all have to sort through

Our backlog is about three weeks long, although it varies quite a bit - e.g., we get way more submissions on Sundays and Mondays since the majority of games happen on the weekend, and we also tend to get more submissions right after holidays and around the end of summer vacation / beginning of fall.

There's also a technical component due to Tumblr's.... let's call it unconventional back-end infrastructure / database schema, which has an unfortunate tendency to hide random submissions until mystical unknown criteria are met. Which is why we sometimes post quotes that were submitted months ago - they've finally been released from the depths of Tumblr's databases into our inbox.

197 notes
